
S2N Spotlight

I used to like to write about philosophy or psychology on a Friday. Today is an absolutely gorgeous summer’s day in Sydney. Today I am going to write a little about AI, but not about the valuations of the industry, rather a little overview of how these wonderful LLMs (large language models) work. This is a low-tech explanation about a high-tech subject.

The trigger for today’s spotlight was a set of results I came across from the Tiny Recursive Model (TRM), developed by Samsung researcher Alexia Jolicoeur-Martineau. It is a neural network with just 7M parameters that reportedly outperforms much larger models like GPT-4 on some complex reasoning tasks.

Ok, let me pause right there. What the #$!… is he talking about, 7 million parameters? The parameters are the tuning knobs: the weights the model adjusts during training so it can predict, statistically, what word is most likely to come next. If 7 million sounds like a lot, it isn’t. GPT-4 and other top LLMs reportedly have hundreds of billions of parameters. That means they have to do a huge, and I mean HUGE, amount of computation to make a simple forecast. Picture a lot of computers running lots of calculations across hundreds of billions of parameters to predict just the next token. We will assume a token is a word for today.
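For a rough sense of scale, here is a back-of-the-envelope sketch. It leans on a common rule of thumb that a dense model does about two floating-point operations per parameter for every token it predicts. The 200 billion figure is my own illustrative stand-in for "hundreds of billions", not a published number for any model, and the function name is made up for this example.

```python
# Rule of thumb (an approximation, not an exact law): one token of output
# costs roughly 2 floating-point operations per parameter in a dense model.
FLOPS_PER_PARAM_PER_TOKEN = 2


def flops_per_token(num_parameters: int) -> int:
    """Rough compute for a single pass through the model."""
    return FLOPS_PER_PARAM_PER_TOKEN * num_parameters


tiny_model = 7_000_000           # the 7M parameters reported for TRM
large_model = 200_000_000_000    # hypothetical stand-in for a large LLM

tiny_cost = flops_per_token(tiny_model)
large_cost = flops_per_token(large_model)
size_ratio = large_model / tiny_model

print(f"Tiny model:  ~{tiny_cost:,} operations per pass")
print(f"Large model: ~{large_cost:,} operations per pass")
print(f"The large model has ~{size_ratio:,.0f}x as many knobs")
```

This only counts one pass through the knobs. A recursive model reuses its weights several times per answer, so its real cost per answer is higher than a single pass suggests, but the gap in size is still enormous.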

Now for the next big lesson in how these LLMs think.

They “think” one word ahead. They are not “thinking” about the whole sentence structure and the key word to drop in twenty words’ time, as we like to imagine we do. Instead, they are asking, “What is the most probable next word, based on everything I have learned about how people continue text like this?” A reliable predictor of the next word needs a statistical model trained on a vast number of words and sentences. You grooving me, bruv (not sure why I did that 😎). Now you know why they need so many billions of knobs to make a coherent sentence, let alone draft your resignation letter or make your newsletter sound smart. [I take full responsibility for my newsletter, good or bad.]
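If you want to see the one-word-at-a-time idea in action, here is a toy sketch. It is not how a real LLM works inside: a real model uses a neural network with billions of weights and looks at the whole context, whereas this toy just counts which word most often follows the previous word. The tiny corpus and the function names are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        counts[current_word][next_word] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts, start, max_words=5):
    """Build a sentence one word at a time, always taking the top guess."""
    output = [start]
    for _ in range(max_words):
        next_word = predict_next(counts, output[-1])
        if next_word is None:
            break
        output.append(next_word)
    return " ".join(output)


# Made-up training text, purely for illustration.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigram_model(corpus)

print(predict_next(model, "the"))  # "cat" follows "the" most often here
print(generate(model, "the"))
```

With only a count table and a handful of sentences, the output quickly goes in circles. That is the point: getting from this to a coherent resignation letter is what all those billions of knobs are for.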

Let me bring things back to the Tiny Recursive Model, where I started this newsletter. The investment and trading world was long dominated by the big end of town, who had access to the biggest and most expensive hardware and software. It felt like an arms race where those with the big bucks were destined to win over the smart and underfunded squirts.

There is nothing I love more than when necessity becomes the mother of invention and smart thinking replaces bloated, lazy thinking. I am hoping this serves as inspiration to those who like to think smart and challenge the status quo. Did I just get philosophical? Oops, it just happened. TGIF.

S2N Observations

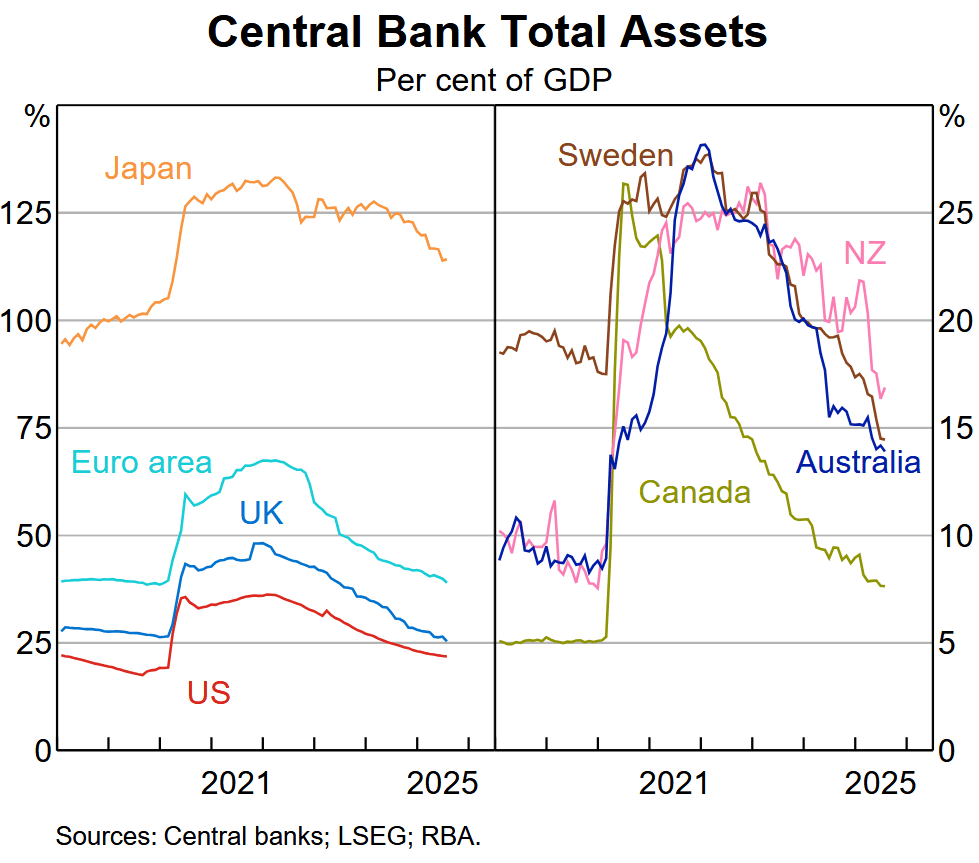

Yesterday I spotlighted the central banks’ quantitative tightening (QT) with light reference to the size of their balance sheets relative to their respective GDPs. Occasionally life serves you lemonade, and today quite by chance I stumbled on a chart by the Reserve Bank of Australia.

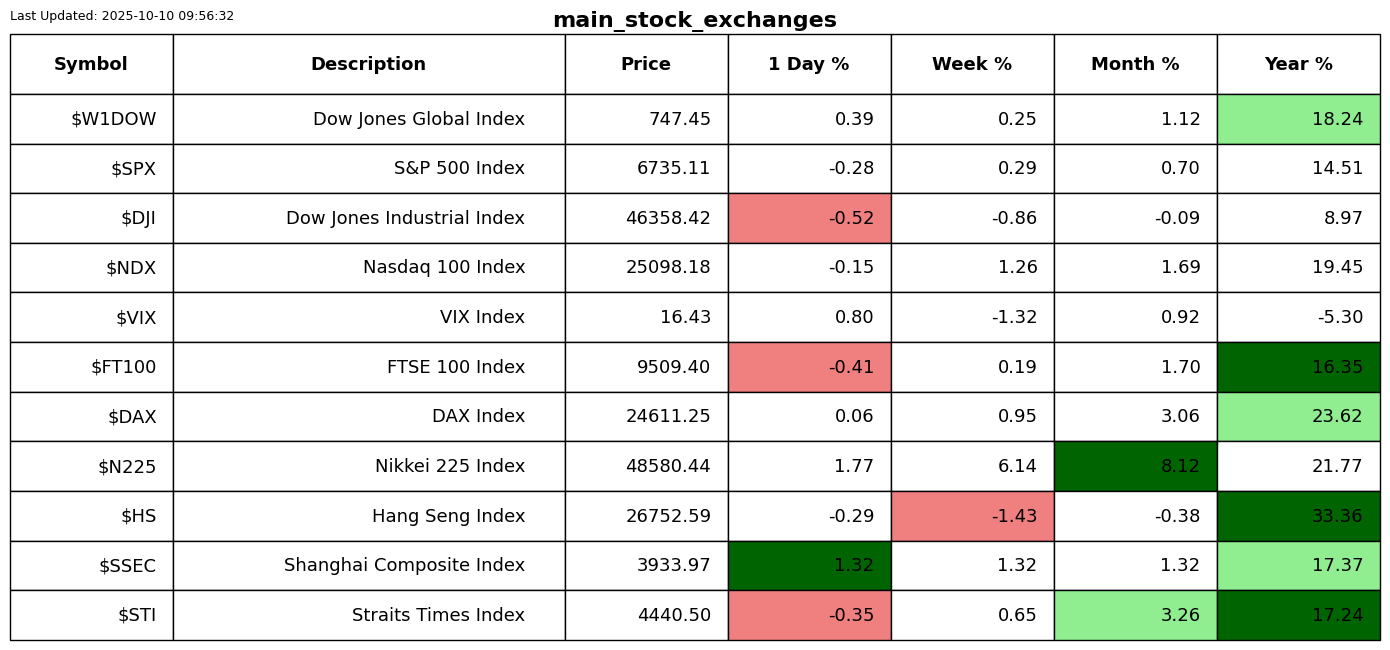

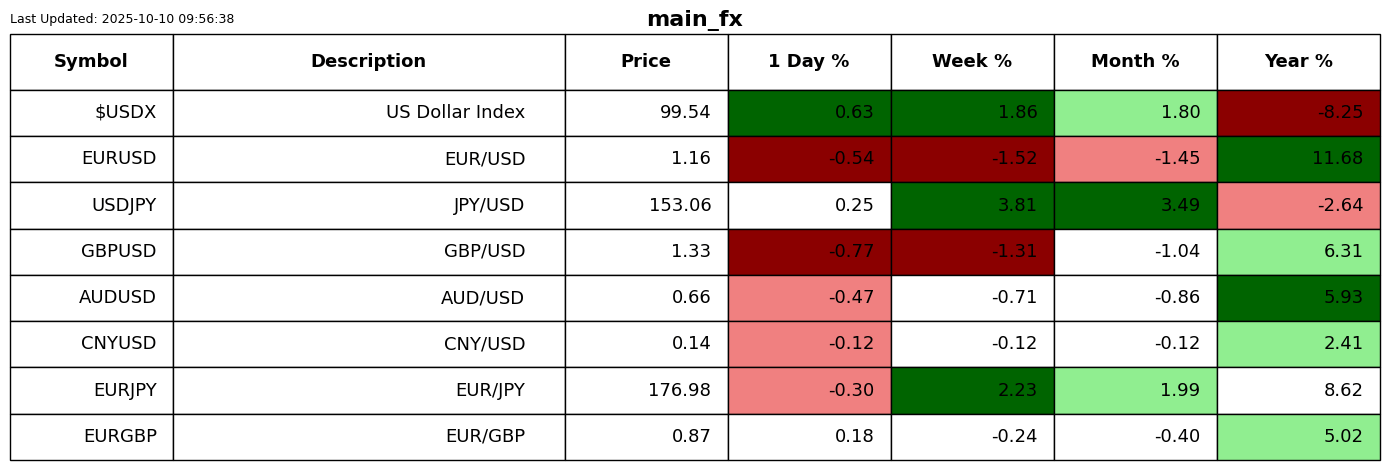

The left side shows the big banks, and the right side the smaller banks. They are split because the size of the big banks’ balance sheets relative to GDP is “off the charts.” Japan is by far the most aggressive, and one has to anticipate a world of pain for its currency in the years to come.

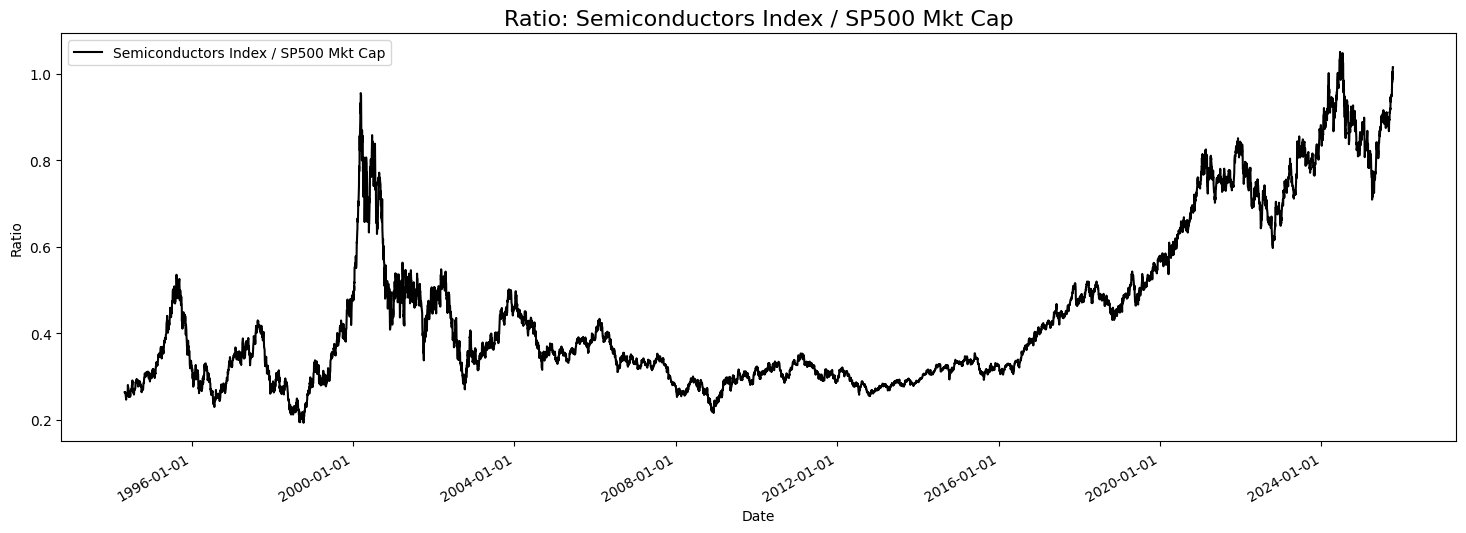

Nvidia just made new ATHs (all-time highs). The whole future of AI funding is being sold on promises, not money, as the companies making the promises don’t have the money. I was playing around with the semiconductor index, and this ratio stood out as significant. I thought the previous ratio high and subsequent sell-off were the “one”. I still think they were. Look out for air pockets; there are plenty about.

S2N Screener Alert

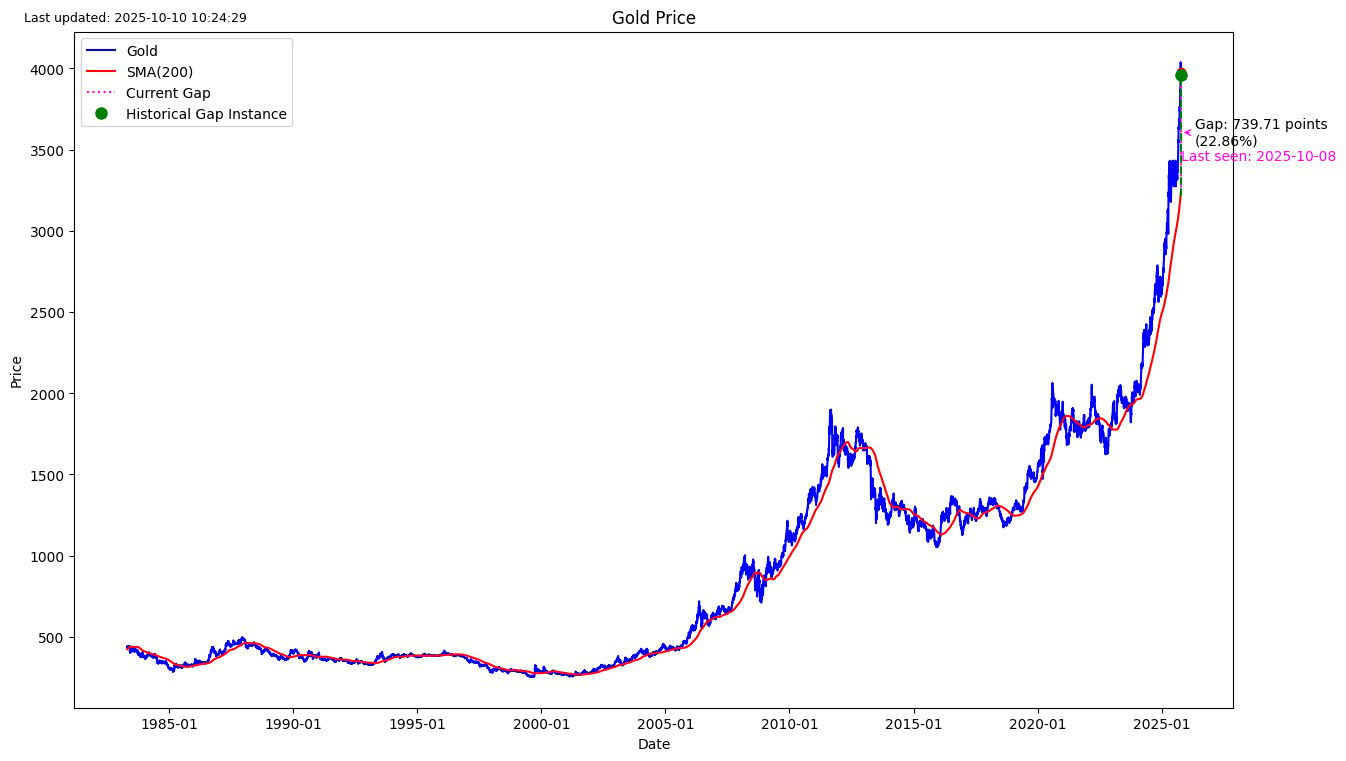

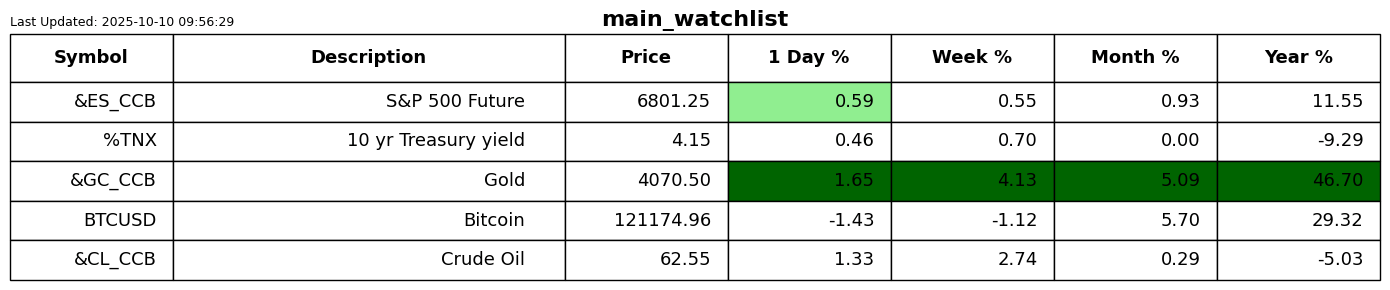

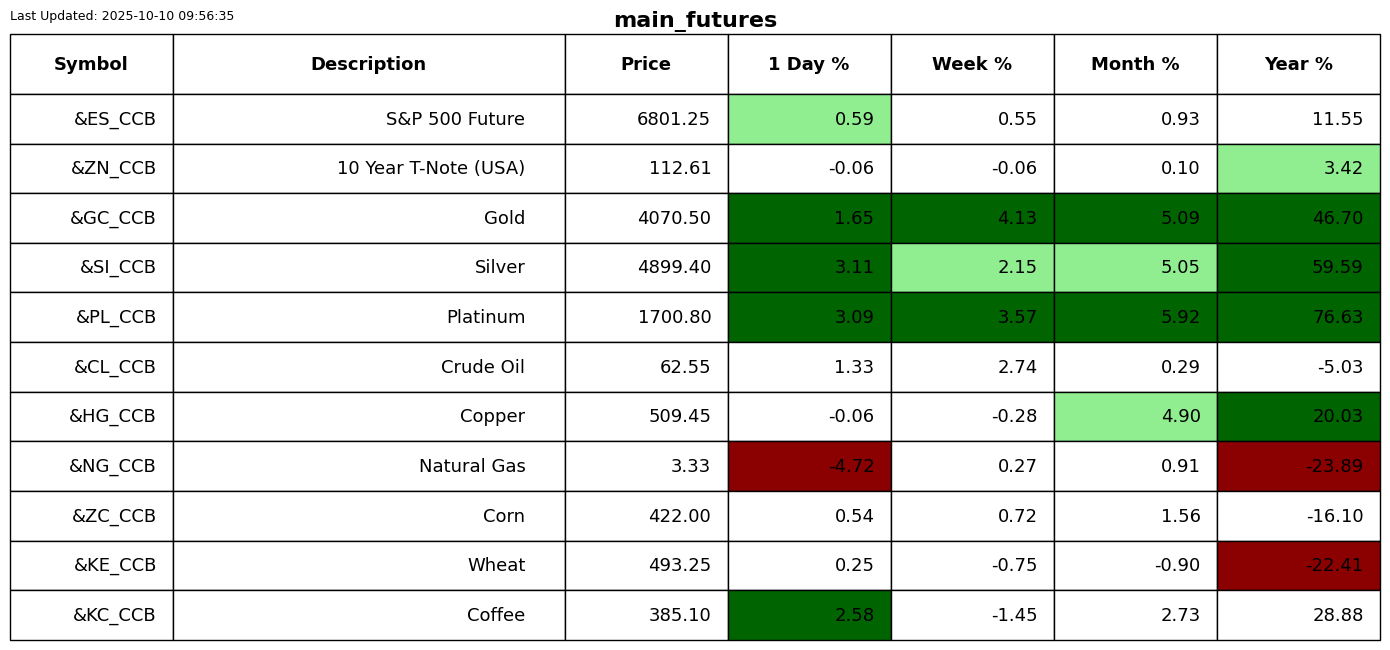

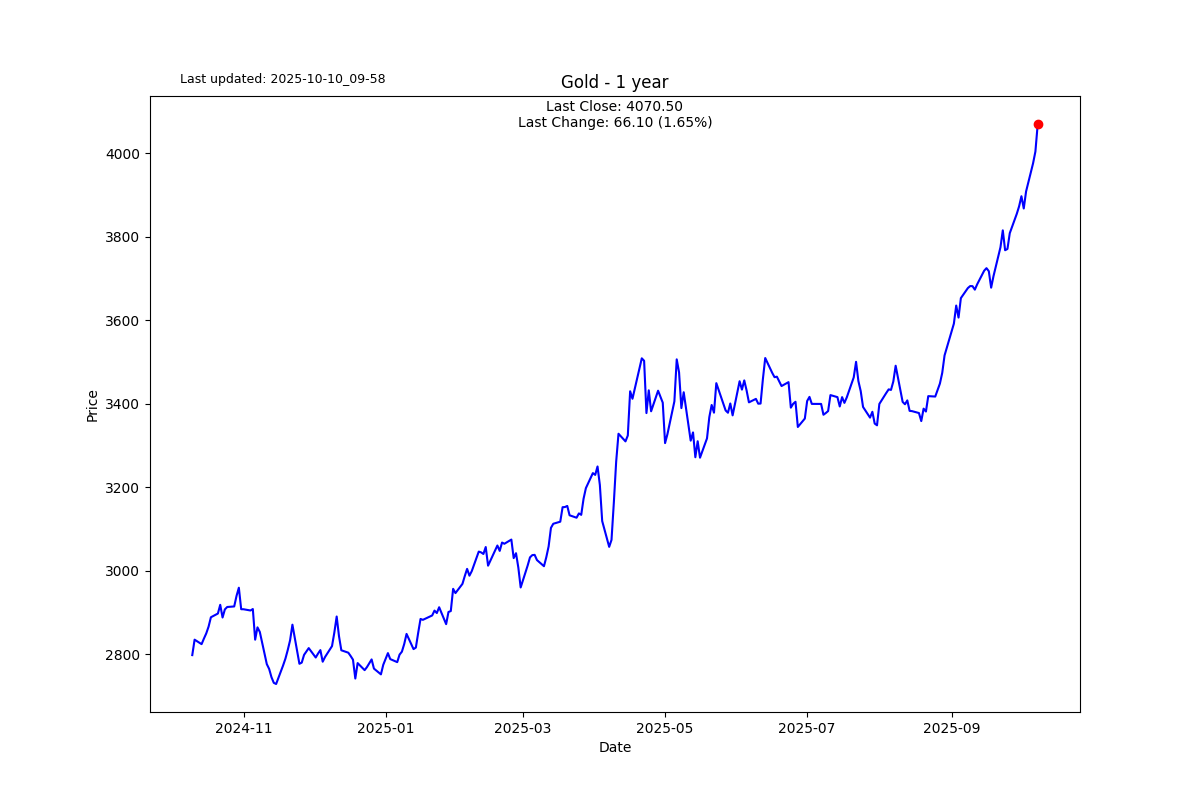

Gold has never been so far above its 200-day moving average. I hate to be a party pooper, but I am always on alert for extremes. I was going to quote Aristotle on the doctrine of the mean, but today I thought of this quote from the author of the Hippocratic treatise On Breaths, which encapsulates my thoughts on extremes so well. Aristotle did in fact incorporate the treatise in his writings on moderation. In case you didn’t realise, I am a huge fan of Aristotle and Maimonides for their thinking about the dangers of extremes.

"Opposites are cures for opposites. Medicine is in fact addition and subtraction, subtraction of what is in excess, addition of what is wanting."

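For anyone who wants to put a number on "how far above its 200-day moving average", here is a minimal sketch of the calculation. The prices are made up for illustration and are not gold prices; the function names are my own.

```python
def simple_moving_average(prices, window=200):
    """Average of the most recent `window` closing prices."""
    if len(prices) < window:
        raise ValueError("not enough prices for the requested window")
    return sum(prices[-window:]) / window


def percent_above_average(prices, window=200):
    """How far the latest price sits above (+) or below (-) its average, in %."""
    average = simple_moving_average(prices, window)
    return (prices[-1] / average - 1) * 100


# Made-up closing prices: flat for 199 days, then a jump on the last day.
prices = [100.0] * 199 + [130.0]

stretch = percent_above_average(prices)
print(f"Latest price is {stretch:.1f}% above its 200-day average")
```

Run over a long price history, the same calculation for each day gives a series whose extremes are easy to spot.
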

S2N Performance Review

S2N Chart Gallery

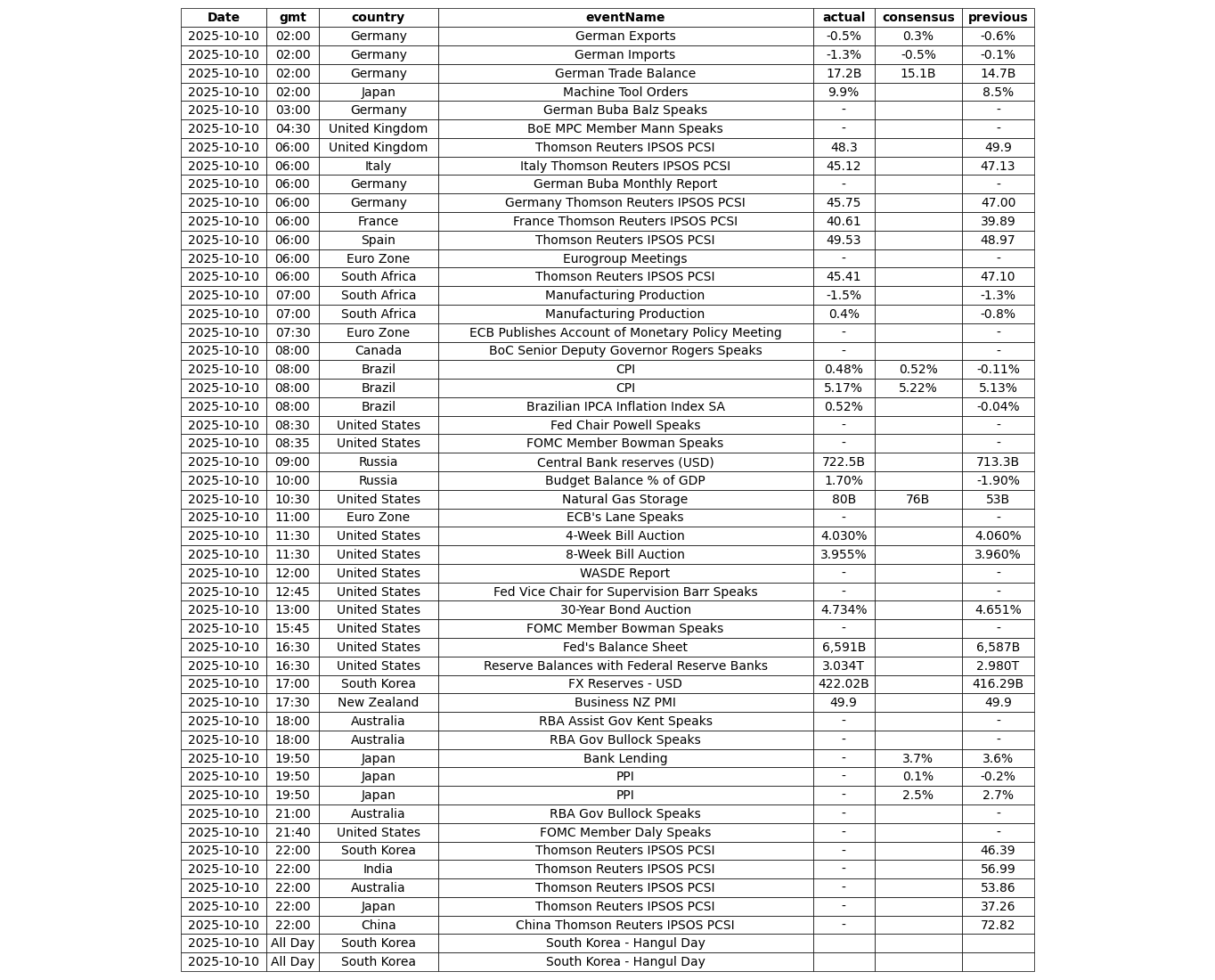

S2N News Today